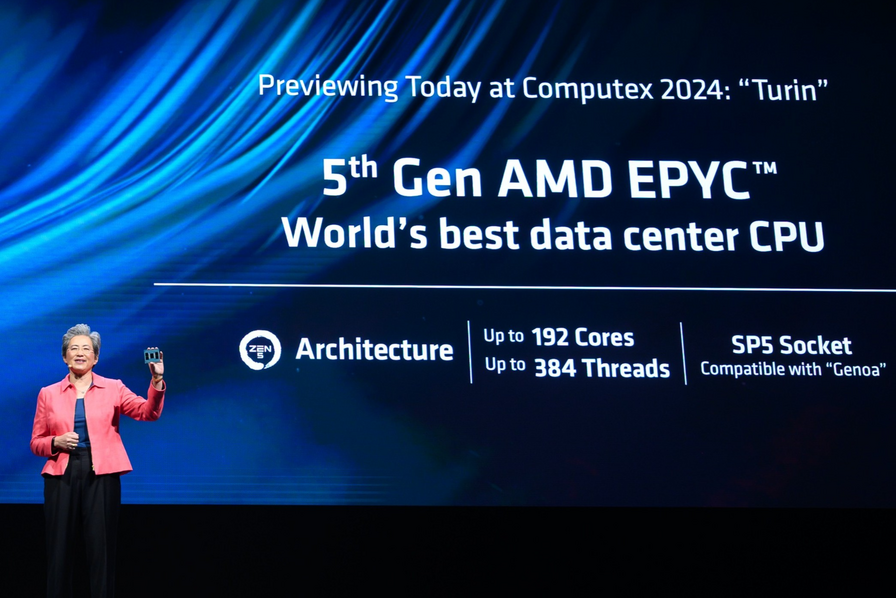

At today’s Advancing AI event in San Francisco, AMD unveiled its latest AI and high-performance computing solutions, including a new line of Instinct accelerators and EPYC processors. Lisa Su, President and CEO of AMD, presented the products the company has been working on in recent months, starting with the EPYC 9005 series processors.

Built on AMD’s “Zen 5” architecture, these CPUs deliver record-breaking performance and power efficiency, the company claims. The EPYC 9005 series includes the 64-core EPYC 9575F, designed specifically for GPU-accelerated AI systems that need maximum performance from the host processor.

A processor tuned for Meta’s Llama 3.1 models

With a boost frequency of up to 5GHz, versus 3.8GHz for the competing processor, the EPYC 9575F delivers up to 28% faster processing, which is essential for keeping GPUs fed with data in demanding AI workloads. On small and medium-sized generative AI models such as Meta’s Llama 3.1-8B, the EPYC 9965, the series’ 192-core flagship, delivers 1.9x the throughput of competing processors, AMD claims.

Dell, HPE, Lenovo, and Supermicro are among the first partners to adopt the new processors. “Whether AMD powers the world’s fastest supercomputers, the largest enterprises, or the largest hyperscalers, we have earned the trust of customers who value performance, innovation and power efficiency,” said Dan McNamara, Senior Vice President and General Manager of AMD’s Server Division.

Instinct accelerators ready for AI workloads

The other major announcement concerns the Instinct MI325X accelerators, designed for the next generation of large-scale AI infrastructure. The MI325X offers industry-leading memory capacity and bandwidth, with 256GB of HBM3E delivering 6.0TB/s, which AMD says amounts to 1.8x the capacity and 1.3x the bandwidth of Nvidia’s H200.

On AI models, these accelerators can make a difference: AMD says they deliver up to 1.3x the inference performance of the H200 on Mistral 7B at FP16, 1.2x on Llama 3.1 70B at FP8, and 1.4x on Mixtral 8x7B at FP16. According to AMD, the MI325X is on track to enter production in Q4 2024, with widespread system availability from a broad range of platform providers, including Dell, Eviden, Gigabyte, HPE, Lenovo, Supermicro and others, starting in Q1 2025.

Keeping to its annual roadmap cadence, Lisa Su also previewed the MI350 series. Based on the CDNA 4 architecture, these accelerators promise up to a 35x improvement in inference performance over AMD’s CDNA 3-based accelerators, according to the manufacturer. The MI350 series is expected in the second half of 2025, and the AMD Instinct MI400 series is planned for 2026.

The company is expanding its networking solutions portfolio

AMD also unveiled the Pensando Salina data processing unit (DPU) and the Pensando Pollara 400 network interface card. Both are designed to maximize AI infrastructure performance by optimizing data flows and GPU-to-GPU communication for efficient, scalable AI systems. The company plans to sample them with customers in the fourth quarter of this year, with availability expected in the first half of 2025.

A market estimated at $500 billion by 2028

Lisa Su was confident about AMD’s position in the AI market: “Going forward, we expect the data center AI accelerator market to reach $500 billion by 2028. We are committed to delivering open innovation at scale through our expanded silicon, software, network and cluster-level solutions.”

In a notable show of strength, AMD invited technical leaders from Cohere, Google DeepMind, Meta, Microsoft, and OpenAI on stage to explain how they use the ROCm software stack to deploy models and applications on Instinct accelerators.

Major AI players weigh in

As a reminder, since their launch in December 2023, Instinct MI300X accelerators have been widely deployed by leading cloud, OEM and ODM partners and serve millions of users every day through AI models and solutions. At the conference, Google highlighted how EPYC processors power a wide range of AI instances, including its “AI Hypercomputer,” a supercomputing architecture designed to maximize the return on AI investments. The Mountain View giant also announced that virtual machines built on the EPYC 9005 series will be available in early 2025.

Oracle relies on AMD products to provide fast, energy-efficient compute and networking infrastructure to customers such as Uber, Red Bull Powertrains, PayPal, and Fireworks AI. Databricks highlighted how its models and workflows run on AMD Instinct and ROCm: its tests showed that the memory capacity and compute capabilities of Instinct MI300X GPUs help deliver a performance increase of more than 50% on Llama and on Databricks’ proprietary models.

Finally, Meta detailed how EPYC processors and Instinct accelerators power its compute infrastructure across AI deployments and services, with the MI300X serving all live traffic for Llama 3.1 405B.